Here’s the more technical explanation of prototype 2. I describe the modules or, metaphorically, “neural clusters” that information flows through. For the Elixir-curious, I describe some of the Elixir features I rely on. The really nitty-gritty details are in italic text, and I’ve tried to make them skippable.

Like this! You can find links to the source code in the margin.

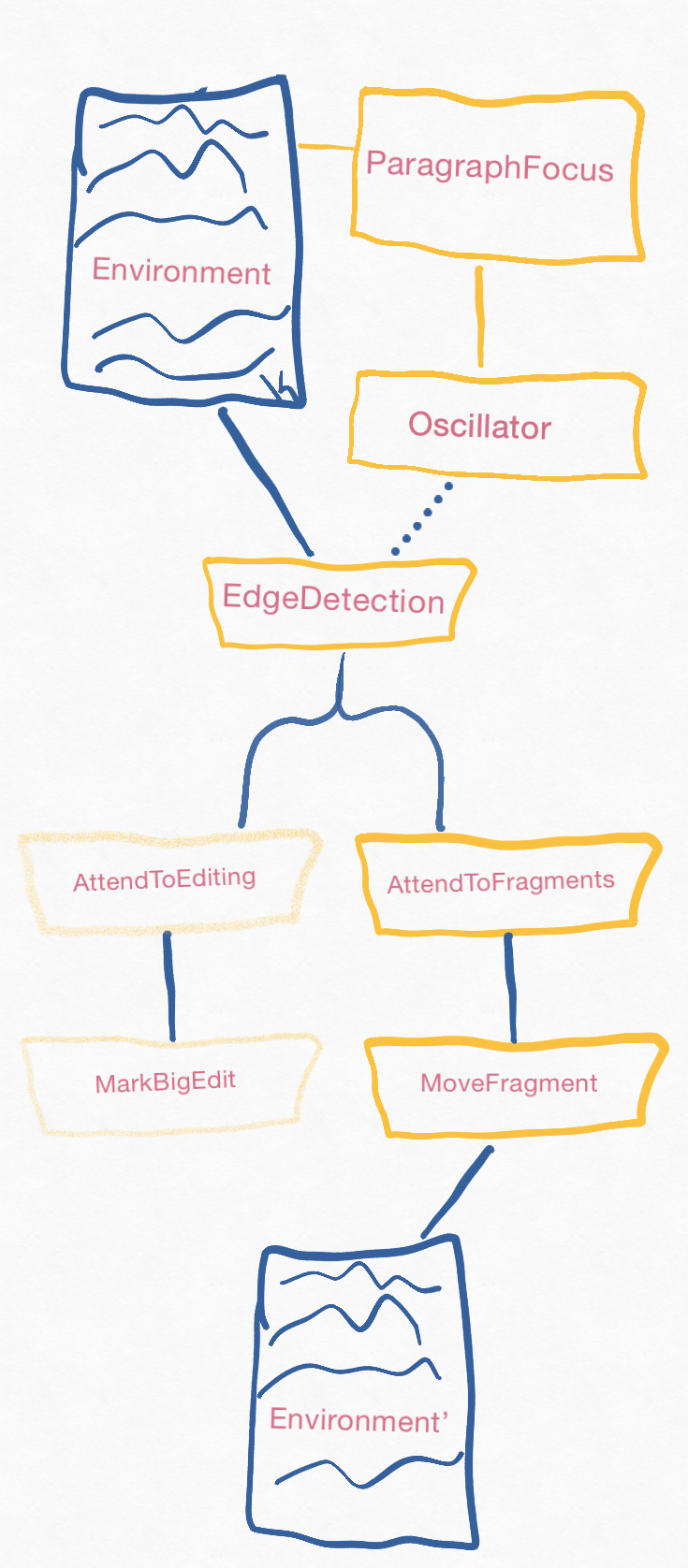

Control flows through these modules, or 'neural clusters'.

This explanation assumes you’ve read the previous description of “Moving a fragment in prototype 2” and “Just enough Elixir for the prototypes”.

Prototype 2 is run via the mix run --no-halt command. This is the result, with apologies if you’re trying to read this on a phone or some other small screen:

```
app_animal/paragraph_focus  focus on a new paragraph
paragraph_focus/environment has '_☉☉__\n\nfragment\n\n___'
neural/oscillator
neural/oscillator           tick!
perceptual/edge_detection   edge structure: ■_■_■
control/attend_to_fragments looking for fragments in ■_■_■
motor/move_fragment         will remove fragment originally at 5..12
motor/move_fragment         paragraph is now "_☉☉__\n\n___"
motor/move_fragment         stashed fragment "\nfragment\n" off to the side
neural/oscillator
neural/oscillator           tick!
perceptual/edge_detection   edge structure: ■_■
control/attend_to_fragments looking for fragments in ■_■
control/attend_to_fragments nope
```

The names down the left-hand side are abbreviated names of Elixir modules, translated into their corresponding filenames. (I prefer to read them that way in diagnostic output: because the right-hand side is more important, I don’t want the module names ToBeShouty. I have my quirks.)

Let’s work through how each of those modules is started and what they do.

Shifting the focus

Initialization modules

For the moment, the entirety of the app-animal’s environment is a single paragraph, initially represented as a string and an editing cursor.

I imagined the editing cursor entering a new paragraph, which would cause an entire collection of modules to focus on that paragraph. The focusing is done by ParagraphFocus.

ParagraphFocus takes a string and a cursor position and wraps them in a process. (Remember that Elixir code can only change state via a process.) That process is described by the module Environment.

The environment is displayed like this:

```
_☉☉__\n\nfragment\n\n___
```

The ☉☉ isn’t part of the text; it shows where the cursor is.

Later processes – perceptual and motor – interact with the paragraph directly, though it now seems to me they ought to be guided in some way by the ParagraphFocus.

Processes interact with other processes via messages. The most basic way of sending a message uses the destination’s pid, which is a unique value created when the destination process was. Pids are unique (even among processes spread out among various cores). Being essentially pointers, they normally have to be passed to code that wants to message the process they point to. However, you can also give the process a particular name that any code can use. It’s common to use the module itself as the name, which I did for Environment. So the Environment process is basically a global variable. I know, I know: “globals, ick!” However, it seems appropriate for the, you know, physical world. It’s maybe even appropriate for processes “inside the brain”, like ParagraphFocus itself. After all, neurons send their signals to fixed, known locations. (That changes over time, but not on a timescale like the one we’re simulating here.)

I’ll be rethinking this use of global names.
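To make the two addressing styles concrete, here's a minimal sketch of a GenServer registered under its own module name. NamedBox and its :get message are hypothetical stand-ins, not the prototype's Environment; the name: option to GenServer.start_link is the standard way to register a process.

```elixir
defmodule NamedBox do
  use GenServer

  # `name: __MODULE__` registers the process under the module's own name,
  # which is what makes it reachable like a global variable.
  def start_link(value) do
    GenServer.start_link(__MODULE__, value, name: __MODULE__)
  end

  @impl true
  def init(value), do: {:ok, value}

  @impl true
  def handle_call(:get, _from, value), do: {:reply, value, value}
end

{:ok, pid} = NamedBox.start_link(:paragraph)

# Addressing by pid: the pid must be handed to whoever wants to send.
IO.inspect(GenServer.call(pid, :get))       # prints :paragraph

# Addressing by name: any code that knows the module name can send.
IO.inspect(GenServer.call(NamedBox, :get))  # prints :paragraph
```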

ParagraphFocus also starts the Oscillator, which sends a message to EdgeDetection every few seconds. That's biologically plausible in that the brain does have oscillators, though I know little about them.

That’s easy to do. You can ask the Erlang virtual machine to send a message after some number of milliseconds:

```elixir
Process.send_after(self(), :tick, millis)
```

Then the receiving process (in this case, the Oscillator itself) does what it does and sends itself another delayed message.
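Here's a minimal sketch of that self-rescheduling loop. It isn't the prototype's Oscillator: to keep it self-contained, the hypothetical TickingOscillator sends {:tick, count} to a pid rather than poking a module like EdgeDetection.

```elixir
defmodule TickingOscillator do
  use GenServer

  # Hypothetical API: `target` is a pid that receives {:tick, count}.
  def start_link(target, every: millis) do
    GenServer.start_link(__MODULE__, {target, millis, 0})
  end

  @impl true
  def init({_target, millis, _count} = state) do
    # Ask the VM to deliver the first :tick to ourselves later.
    Process.send_after(self(), :tick, millis)
    {:ok, state}
  end

  @impl true
  def handle_info(:tick, {target, millis, count}) do
    send(target, {:tick, count + 1})
    # Schedule the next tick; this is what keeps the oscillation going.
    Process.send_after(self(), :tick, millis)
    {:noreply, {target, millis, count + 1}}
  end
end

{:ok, _oscillator} = TickingOscillator.start_link(self(), every: 10)
```

Because each tick schedules the next one, the oscillator keeps going for as long as its process lives; nothing outside it has to drive the loop.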

Perception

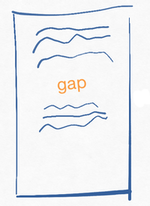

It occurs to me that EdgeDetection isn't such a great name, as what it really does is summarize the spaces between edges. That is, it converts a scenario like this:

… into this data structure:

```elixir
[text: 0..30, gap: 31..33, text: 34..59]
```

… which is represented in the output like this:

```
perceptual/edge_detection edge structure: ■_■
```

The Elixir structure uses two data types I haven't shown before. The first is a Range, written in the form first..last. Note that the range includes the last element; I'm more used to ranges that exclude it. The second is a List, indicated by the square [] brackets. But it's a special kind of list, a keyword list: a list of two-element tuples where the first element is an atom. The Keyword module collects conventions for working with such lists. The same list could be written like this:

```elixir
[{:text, 0..30}, {:gap, 31..33}, {:text, 34..59}]
```

The Keyword module provides an interface that’s something like a Map or dictionary, except it accounts for how keyword lists can have duplicate keys. (And fetching a value is linear in the size of the list, rather than approximately constant as with a Map.)
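A few lines you could paste into iex to poke at these data types, using the same keyword list as above. Nothing here is specific to the prototype; Keyword.get/2, Keyword.get_values/2, and Enum.to_list/1 are standard library functions.

```elixir
shape = [text: 0..30, gap: 31..33, text: 34..59]

# The keyword syntax is sugar for a list of {atom, value} tuples.
IO.inspect(shape == [{:text, 0..30}, {:gap, 31..33}, {:text, 34..59}])  # prints true

# Keyword.get returns the first value stored under a key...
IO.inspect(Keyword.get(shape, :text))         # prints 0..30
# ...while Keyword.get_values returns every value for a duplicated key.
IO.inspect(Keyword.get_values(shape, :text))  # prints [0..30, 34..59]

# Ranges include their last element.
IO.inspect(Enum.to_list(31..33))              # prints [31, 32, 33]
IO.inspect(Enum.count(0..30))                 # prints 31
```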

Keyword lists are frequently used to provide named arguments to functions, usually in a way that allows for default values and arguments supplied in any order. Because I rather like the fixed-order named arguments of Smalltalk and Objective-C, I'll often write my function definitions like the function that launches the Oscillator:

```elixir
def poke(module, every: millis) do...
```

That’s a syntactic variant of this:

```elixir
def poke(module, [every: millis]) do
```

…or, for that matter, this:

```elixir
def poke(module, [{:every, millis}]) do
```

In any of those cases, pattern matching binds millis to the passed value in a call like this:

```elixir
Oscillator.poke(EdgeDetection, every: 5_000)
```

That’s a pretty idiosyncratic style, though, I think.
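To show what that style buys and costs, here's a sketch contrasting it with the more conventional Keyword.get-with-default approach. PokeStyles, poke_flexible, and the 5_000 default are hypothetical; EdgeDetection is used only as an atom here, so no such module needs to exist.

```elixir
defmodule PokeStyles do
  # The post's fixed-order style: the options must be exactly
  # [every: millis], so an extra or misspelled option fails to match.
  def poke(module, every: millis), do: {module, millis}

  # Hypothetical conventional style: options in any order, with a default.
  def poke_flexible(module, opts \\ []) do
    millis = Keyword.get(opts, :every, 5_000)
    {module, millis}
  end
end

IO.inspect(PokeStyles.poke(EdgeDetection, every: 5_000))  # prints {EdgeDetection, 5000}
IO.inspect(PokeStyles.poke_flexible(EdgeDetection))       # prints {EdgeDetection, 5000}
# PokeStyles.poke(EdgeDetection, every: 5_000, jitter: 10) raises FunctionClauseError
```

The fixed-order version rejects misspelled or extra options with a FunctionClauseError; the conventional version tolerates them, which is friendlier but quieter about mistakes.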

In the brain, the visual image (or some processed version of it) flows into an edge detector. Without using or implementing something like reactive extensions, it seemed I’d have to use a getter to fetch the paragraph text. But, for no very good reason, I chose a different approach.

The code to process the paragraph text lives inside one main function, edge_structure. I won’t describe it, but I added some comments that might help you:

```elixir
def edge_structure(string) do
  # Three passes over the string, whee!
  parts = decompose(string)  #=> ["text", "\n\n", "text"]
  labels = classify(parts)   #=> [:text, :gap, :text]
  ranges = ranges(parts)     #=> [0..3, 4..5, 6..9]
  List.zip([labels, ranges])
end
```

The nearby decompose function gives me an excuse to describe more Elixir. Its base structure is this:

```elixir
def decompose(string) do
  string
  |> String.split(...)
  |> Enum.reject(...)
end
```

The |> symbols are just syntactic sugar for nested function calls: the value on the left of each |> becomes the first argument of the call on its right. That is, I could write it like this:

```elixir
def decompose(string) do
  Enum.reject(String.split(string, ...), ...)
end
```

People who read from left to right, top to bottom, tend to think of time as flowing in the same direction, and the "pipeline" notation supports that.
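A small sketch of that equivalence, using the same String.split-then-Enum.reject shape as decompose. The input string and the single-space separator are made up for the example.

```elixir
input = "b  a c"

# Pipeline form: each result becomes the first argument of the next call.
piped =
  input
  |> String.split(" ")
  |> Enum.reject(&(&1 == ""))

# The same thing written as nested calls.
nested = Enum.reject(String.split(input, " "), &(&1 == ""))

IO.inspect(piped == nested)  # prints true
IO.inspect(piped)            # prints ["b", "a", "c"]
```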

The call to String.split shows two things:

```elixir
|> String.split(@gap_definition,
                include_captures: true)
```

On the second line, you can see the use of a keyword argument. (The :include_captures argument is optional; leaving it out reverts to the default behavior.) The first line shows the Elixir way of writing a constant: @gap_definition. That was defined earlier in the module as a regular expression:

```elixir
@gap_definition ~r/\n\n+/
```

That's actually a module attribute, not a constant. When it's used inside a function body, as here, its value is read at compile time and inlined, so in practice it behaves like a constant. Attributes allow a number of neat tricks, which I might show someday, but not today.
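For the curious, here's one way the three helpers could be filled in. This is my own sketch, not the prototype's code: EdgeSketch is a hypothetical name, and the classify and ranges bodies are guesses that reproduce the example comments in edge_structure. The regex is the same one as @gap_definition, but here it sits in a small private function instead of a module attribute.

```elixir
defmodule EdgeSketch do
  def edge_structure(string) do
    parts = decompose(string)
    labels = classify(parts)
    ranges = ranges(parts)
    List.zip([labels, ranges])
  end

  # Split on gaps, keeping the gaps themselves, and drop the empty
  # strings that appear when the text starts or ends with a gap.
  def decompose(string) do
    string
    |> String.split(gap_definition(), include_captures: true)
    |> Enum.reject(&(&1 == ""))
  end

  # Only the captured separators can contain a blank line, so matching
  # the gap regex is enough to tell gaps from text.
  def classify(parts) do
    Enum.map(parts, fn part ->
      if Regex.match?(gap_definition(), part), do: :gap, else: :text
    end)
  end

  # Convert part lengths into inclusive start..last ranges.
  def ranges(parts) do
    {ranges, _next_start} =
      Enum.map_reduce(parts, 0, fn part, start ->
        last = start + String.length(part) - 1
        {start..last, last + 1}
      end)

    ranges
  end

  # Same regex as @gap_definition in the post.
  defp gap_definition, do: ~r/\n\n+/
end

IO.inspect(EdgeSketch.edge_structure("text\n\ntext"))
# prints [text: 0..3, gap: 4..5, text: 6..9]
```

Assuming the demo paragraph's text is "___\n\nfragment\n\n___" (the ☉☉ marks are only the cursor display), the sketch yields [text: 0..2, gap: 3..4, text: 5..12, gap: 13..14, text: 15..17], which lines up with the 5..12 fragment range in the log.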

Rather than using a getter to fetch the paragraph text and then running edge_structure on it, I send edge_structure to the Environment process, which runs the function and returns the result. That looks like this:

```elixir
paragraph_shape =
  GenServer.call(Environment, summarize_with: &edge_structure/1)
```

Note that I'm being cutesy – perhaps too cutesy – here. That summarize_with: ... bit is a keyword list. (You can omit the square brackets if it's the last element in the argument list.) It'd be more idiomatic to use the tuple {:summarize, ...}, but the English major in me likes the way the keyword argument reads.

With this approach, I don't have to write different getters as the Environment becomes more complicated.

GenServer.call was explained in the earlier summary of Elixir processes and messages, but the funny &edge_structure/1 may need a little explanation.

In the jargon, Elixir is a "Lisp 2" language: there's one namespace for function names and a separate one for variable names. That means you can type the following with no problem:

```elixir
max = max(1, 2)
```

To the compiler, that means call the function max and bind the result to the variable max.

In a "Lisp 1", there are only variables. So the above code would mean:

- Evaluate the variable max.
- Apply the resulting function (it better be a function!) to the arglist [1, 2].
- Bind the result to the variable max.
- Oops, you just turned max from a function to the number 2. Are you sure that's what you wanted?

I first learned a Lisp 2 (Common Lisp) and only later a Lisp 1 (Scheme). I was scandalized by the above problem, but it turns out it hardly ever happens, even in a language that doesn’t have compile-time type checking.

Nevertheless, Elixir (and Erlang before it) is a Lisp 2. (Actually, I think it'd be better to call it a Lisp N, as each module is its own independent function namespace. In Elixir, you can't define a named function outside of a module, which is mildly annoying when working in the interactive shell.) That means it has to confront a modern Lisp-2 problem: what if you want to bind a function to a variable? There needs to be some way to refer to a function as a value, not as something you're immediately calling. In Elixir, that's done with the "capture" notation:

```elixir
&max/2
```

(The /2 in &max/2 indicates that we’re referring specifically to the max that takes exactly two arguments. In Elixir, a max that takes, say, a single list of numbers is max/1, a completely different function, sharing nothing but a name with max/2.)

But if there’s a special notation for function-as-value, there has to be a special notation for apply-value-as-function, which is shown in Environment’s implementation. Note the period after f on the second line:

```elixir
def handle_call([summarize_with: f], _from, paragraph_state) do
  result = f.(paragraph_state.text)
  {:reply, result, paragraph_state}
end
```
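Here's a small sketch pulling the capture and dot-call notations together, runnable as a script. The variable names are arbitrary; Kernel.max/2 and Enum.max/1 are the real library functions.

```elixir
# Capturing a named function turns it into a value...
larger = &max/2
# ...and a value holding a function is called with a dot.
IO.inspect(larger.(1, 2))        # prints 2

# Arity is part of the name: Enum.max/1 takes a single list.
largest = &Enum.max/1
IO.inspect(largest.([3, 1, 4]))  # prints 4

# Anonymous functions are values from the start, so they need the dot too.
double = fn n -> n * 2 end
IO.inspect(double.(21))          # prints 42

# Separate namespaces: binding a variable named max doesn't hide max/2.
max = max(1, 2)
IO.inspect(max)                  # prints 2
IO.inspect(max(3, 4))            # prints 4
```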

In the podcast and previous blog posts, I’ve emphasized Elixir processes as models for self-sustaining, circular, neural firing patterns like the one that gradually becomes “convinced”, sniff by sniff, that a rabbit really does smell a carrot.

However, there are lots of neural structures that aren’t circular: input comes in one side, output goes out the other side, and that’s it. They are, essentially, pure functions.

(My tentative names for the two types of neural structures are "circular cluster" and "linear cluster", but I don't use them in code yet.) So I could just have had the Oscillator call edge_structure as a function and not worry about all this "process" foofarah.

However: EdgeDetection is going to pass what it discovers on to the control modules AttendToEditing and AttendToFragments, which may in turn send commands to MarkBigEdit and MoveFragment. In general, there's no telling how long such downstream processing might take, and there's no reason for EdgeDetection to wait around for it to happen. It should "fire and forget".

The appropriate Elixir mechanism for that is the Task, which wraps a function and runs it asynchronously.

Elixir allows tasks to be started such that the starting process can – indeed must – await the results (presumably after doing some work of its own while the task is running; otherwise you'd just call the function synchronously). I don't use tasks that produce results. I start tasks such that the creating process can, and usually does, exit immediately.

I've abstracted tasks into a WithoutReply module, so that EdgeDetection starts its "downstream" modules like this:

```elixir
WithoutReply.activate(@summary.downstream,
                      transmitting: paragraph_shape)
```

Starting a task is straightforward:

```elixir
defp activate_with_args(module, arglist) do
  runner = fn -> apply(module, :activate, arglist) end
  Task.start(runner)
end
```

This shows the two namespaces in action. There’s a freestanding function bound to the variable runner that’s passed to Task.start. There’s also a manual function call. Kernel.apply – which can be abbreviated apply – looks for the function named :activate attached to the given module and then calls it on the given arglist. (Note that it counts the arglist to see which of activate/1, activate/2, and so on to call.)
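Here's a guess at how a WithoutReply.activate built on activate_with_args might look. The post doesn't show it, so WithoutReplySketch, the List.wrap handling of one-or-many modules, and the Listener test module are all hypothetical.

```elixir
defmodule WithoutReplySketch do
  # Hypothetical: start one fire-and-forget task per downstream module,
  # each calling module.activate(data). Nobody waits for the results.
  def activate(modules, transmitting: data) do
    for module <- List.wrap(modules) do
      activate_with_args(module, [data])
    end
  end

  # Same shape as the post's helper.
  defp activate_with_args(module, arglist) do
    runner = fn -> apply(module, :activate, arglist) end
    Task.start(runner)
  end
end

defmodule Listener do
  # Hypothetical downstream module: reports what it was sent.
  def activate({reply_to, shape}), do: send(reply_to, {:got, shape})
end

WithoutReplySketch.activate(Listener, transmitting: {self(), [text: 0..3]})
```

activate returns as soon as the tasks are started; whether Listener ever runs, or crashes, is not the caller's concern, which is the fire-and-forget behavior described above.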

Decisions

EdgeDetection provides data to two downstream control processes. Neurons are very fond of sending results to many recipients.

Harvard University says that the 86 billion neurons in your brain have roughly 100 trillion connections, meaning each neuron is connected to over 1000 others.

One of those control processes is Control.AttendToEditing, whose job is to say, “Say! I see two :texts separated by a :gap. That’s a Big Edit. I will instruct Motor.MarkBigEdit to do that thing it does.” (It doesn’t do anything yet, so I’ll skip MarkBigEdit for the rest of this post.)

The other process is Control.AttendToFragments. It, too, is a fire-and-forget task. It checks for a [text: gap: text: gap: text:] pattern. If it sees it, it starts up the Motor.MoveFragment task. Again, all these tasks are asynchronous: AttendToFragments doesn't wait on MoveFragment, nor is anything waiting on it.
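A sketch of that check as a pattern match on the keyword list EdgeDetection produces. AttendToFragmentsSketch and decide/1 are hypothetical; rather than starting Motor.MoveFragment, the sketch just returns its decision, so both outcomes (pass something on, or do nothing) are easy to see.

```elixir
defmodule AttendToFragmentsSketch do
  # Hypothetical: a lone fragment is a text with a gap on each side
  # and more text beyond each gap.
  def decide([text: _, gap: _, text: fragment_range, gap: _, text: _]) do
    {:move_fragment, fragment_range}
  end

  # Anything else: do nothing, and the chain ends here.
  def decide(_shape), do: :nope
end

IO.inspect(AttendToFragmentsSketch.decide(
  [text: 0..2, gap: 3..4, text: 5..12, gap: 13..14, text: 15..17]))
# prints {:move_fragment, 5..12}

IO.inspect(AttendToFragmentsSketch.decide([text: 0..2, gap: 3..4, text: 5..7]))
# prints :nope
```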

The key thing here is that any of these simpler tasks is a link in a chain. Each has two options:

- It can activate another “neural cluster”, telling it to do whatever it is that it does, perhaps passing along a little data, or…

- It can simply do nothing, which means the entire chain disappears, having done nothing.

This is something vaguely like continuation-passing style in programming. See Wikipedia. I also rather like my explanation in chapter 10, part 2 of Functional Programming for the Object-Oriented Programmer, which I made free a few years ago.

Movement

As explained earlier, EdgeDetection is a task that uses synchronous GenServer.call to pass a summarizer function into the Environment. The result of that function is returned to EdgeDetection.

When the motor process MoveFragment is activated by AttendToFragments, it too communicates with Environment – but to send it an updater rather than a summarizer. It uses the *a*synchronous GenServer.cast to send the updater. The Environment applies the updater to its own state, gets a changed state back, and stashes that away.

I won't describe the updater function because the previous post went through what it does – a combination of perception and action – in some detail. There's a mildly interesting mismatch of data, though. The Environment is only prepared to accept a function that takes a single paragraph_state argument. But that function needs to know some information passed to MoveFragment when it was activated: specifically, the range at which the fragment is expected. If you haven't worked with heavily functional languages like Elixir, you might not know the typical trick, which is a function-making function. Here's an outline of my function-maker:

```elixir
def make_paragraph_updater(original_fragment_range) do
  fn original_paragraph ->
    # code that works on both `original_fragment_range`
    # and `original_paragraph`.
  end
end
```
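To make the trick concrete, here's a deliberately simplified function-maker. It is not the prototype's updater, which does more checking; this hypothetical version just cuts the characters in original_fragment_range out of the paragraph, and it assumes paragraph_state is a map with a :text key, as paragraph_state.text in Environment suggests.

```elixir
defmodule UpdaterSketch do
  # Hypothetical, simplified function-maker. The returned closure
  # remembers original_fragment_range after this function returns.
  def make_paragraph_updater(original_fragment_range) do
    fn original_paragraph ->
      text = original_paragraph.text
      # Keep everything before and after the (inclusive) range.
      prefix = String.slice(text, 0, original_fragment_range.first)
      suffix = String.slice(text, original_fragment_range.last + 1, String.length(text))
      %{original_paragraph | text: prefix <> suffix}
    end
  end
end

paragraph = %{text: "___\n\nfragment\n\n___"}
updater = UpdaterSketch.make_paragraph_updater(5..12)

# The updater takes only the paragraph, as Environment requires.
IO.inspect(updater.(paragraph).text)
# prints "___\n\n\n\n___"
```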

It is the return value of make_paragraph_updater that's given to Environment. That's done like this:

```elixir
updater = make_paragraph_updater(original_fragment_range)
GenServer.cast(@summary.downstream, update_with: updater)
```

The technical term for the returned function is a closure. I first learned of closures in the early 1980s, when some of the Lisp literature referred to them as "full upward funargs". I find that rather charming in that old-fashioned, always-on-the-edge-of-frivolity Lisp way.

On the Environment side, there’s the usual dance: get the current state, generate a new state, return the new version to the runtime:

```elixir
def handle_cast([update_with: f], paragraph_state) do
  {:noreply, f.(paragraph_state)}
end
```
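Putting the two handlers together, here's a minimal sketch of an Environment-like GenServer. EnvironmentSketch is hypothetical and keeps only a :text field; the real Environment also holds the cursor position.

```elixir
defmodule EnvironmentSketch do
  use GenServer

  # Registered under its module name, like the post's Environment.
  def start_link(text) do
    GenServer.start_link(__MODULE__, %{text: text}, name: __MODULE__)
  end

  @impl true
  def init(paragraph_state), do: {:ok, paragraph_state}

  # Synchronous: run a summarizer on the text, reply, keep the state.
  @impl true
  def handle_call([summarize_with: f], _from, paragraph_state) do
    {:reply, f.(paragraph_state.text), paragraph_state}
  end

  # Asynchronous: the updater's return value becomes the new state.
  @impl true
  def handle_cast([update_with: f], paragraph_state) do
    {:noreply, f.(paragraph_state)}
  end
end

{:ok, _pid} = EnvironmentSketch.start_link("text\n\nmore")

IO.inspect(GenServer.call(EnvironmentSketch, summarize_with: &String.length/1))
# prints 10

upcase = fn state -> %{state | text: String.upcase(state.text)} end
GenServer.cast(EnvironmentSketch, update_with: upcase)

IO.inspect(GenServer.call(EnvironmentSketch, summarize_with: fn text -> text end))
# prints "TEXT\n\nMORE"
```

The final call sees the upcased text because messages between a single pair of processes arrive in the order they were sent, so the cast is handled before the call that follows it.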

Next up

One of the following:

- Right now, I have a single demonstration that’s basically hardcoded. I really should have more examples, including at least one that shows the fragment-moving process giving up. Say! Those examples could be end-to-end tests! But that means I have to build a test-or-demo-only mechanism that controls the ordering of asynchronous messages and doesn’t take instructions like “send this message in 5 seconds” seriously.

- As described in the previous post, I need to implement a pathway that marks the paragraph as being in the process of a BigEdit. I choose to add the (realistically unnecessary) complication that the control module shouldn't redundantly mark an already-marked paragraph. That will, for the first time, require me to write a perceptual cluster that handles two different, asynchronously delivered upstream inputs.

- I'm starting to get a sense of different kinds of processes the app-animal will use (to be built on top of Elixir's GenServer and Task). For example, I have control gates and motor system movers. EdgeDetection's way of pretending it's getting a steady flow of information from upstream perceptual clusters, when it's actually implementing a fancy getter, deserves its own name. The MarkAsEditing pathway will probably need something that smooths out temporary inconsistencies in input, something that Andy Clark says is a significant role for the perceptual system. (He seems to be of the opinion that the brain follows the sailplane pilot's maxim: "don't just do something, sit there.")

But first, I ought to get another podcast episode out. I’ve been concentrating too much on code, too little on The Sociology of Philosophies.