This prototype implements a strategy different from the one given in the podcast episode. The strategy was inspired in part by prototype 1 having been too hard. In contrast to that one, this seems like something worth building on, though issues remain. I’ll show and explain the code in a separate post.

Keywords: scanning, fail fast, surprise, brain as prediction engine

I still haven’t gotten around to implementing comments, so in the meantime, you can reply via the Mastodon post or via email.

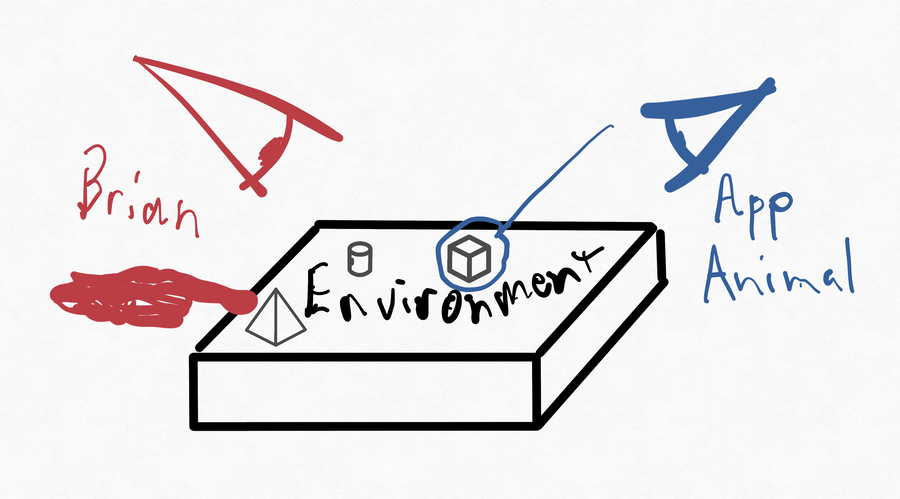

Brian and the app-animal independently see and manipulate the environment


Highlights:

- I’m more explicit about dividing the app-animal into a perceptual system, a control system, and a motor system. Except that the motor system ends up independently managing some perception.

- I favor occasional scanning of the environment over a continual focus on a part of it.

- As with Chapman and Agre’s Pengi and the predatory “bee wolf” wasps, plans are not explicitly represented. Rather, a step in a plan consists of both an activity and the “planting” of a future affordance. If that future affordance isn’t observed after the activity is finished, the remainder of the (implicit) plan just plain doesn’t happen. It’s not dropped – because it was never “held” in the first place.

- I differ from Pengi (though not, perhaps, from the bee wolf) in that receptivity to a particular affordance isn’t “always on” but is turned on as part of triggering a motor action.

- Something of a recent(?) trend in neuroscience is to treat the brain as a “prediction engine” where the perceptual system is primed to expect certain perceptions, so that what’s passed back to the control system is neither the actual perception, nor the expectation, but perception shaped by the expectation. I’m dipping into that.

- Either the books I’ve read or the way I read them left me informed about the control system, but much less so about the perceptual or motor systems. Clearly I need to do more reading.

A metaphor

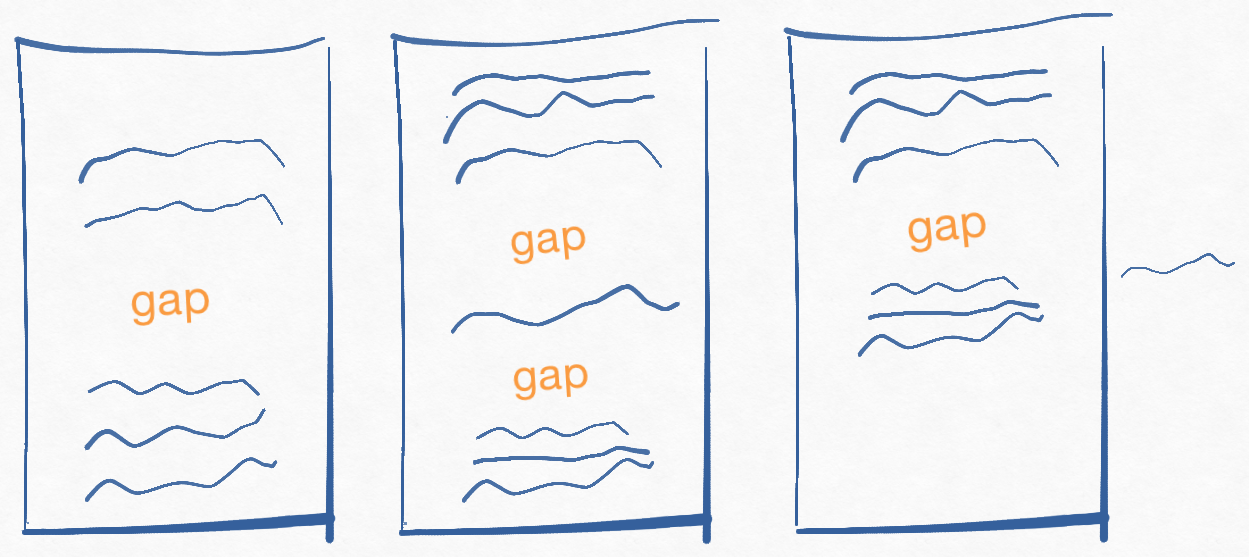

Below I show three stages in the editing of a single paragraph:

In the first picture, the user (“Brian”) has started a Big Edit by moving the cursor into the paragraph and typing two or more newlines, leaving what I call a gap. From that point, he adds more lines before the gap, trying to improve the paragraph.

In the second picture, he’s “cut loose” another chunk of paragraph text by backing up before it and adding two more newlines. The now-isolated text is called a fragment. In the normal course of fussing with a paragraph, Brian will often accumulate enough fragments to push the second part of the paragraph off the screen, which is annoying.

Therefore, the app-animal is to “grip” the fragment, “lift” it out of the paragraph, and “stash” it somewhere associated with the paragraph. The result is the third picture. The fragment off to the side will eventually grow into a list of fragments, if Brian keeps creating them. Visually, Brian will likely see those fragments hovering to the right of the main text-editing area, though that’s not part of this prototype. Notice that the text has closed up so that the whole paragraph is visible and Brian’s work can be focused.
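To make the gap and fragment vocabulary concrete, here is a minimal Python sketch of a scan. It is not the prototype's code (that is promised for a separate post). It assumes the paragraph is a plain string and that a gap is any run of two or more newlines. The names `GAP`, `Fragment`, and `scan_for_fragments` are hypothetical.

```python
import re
from dataclasses import dataclass

# Assumption: a "gap" is a run of two or more newlines inside the paragraph.
GAP = re.compile(r"\n{2,}")


@dataclass(frozen=True)
class Fragment:
    start: int  # index of the fragment's first character
    text: str   # the fragment's text at the time of the scan

    @property
    def end(self) -> int:
        return self.start + len(self.text)


def scan_for_fragments(paragraph: str) -> list[Fragment]:
    """Return every run of text that has a gap on both sides."""
    gaps = list(GAP.finditer(paragraph))
    # Each pair of neighbouring gaps encloses exactly one fragment.
    return [
        Fragment(left.end(), paragraph[left.end():right.start()])
        for left, right in zip(gaps, gaps[1:])
    ]
```

With a single gap (the first picture) the scan finds nothing. Once a second gap cuts some text loose (the second picture), that text is reported along with its position.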

However, the app-animal – like animals in nature – deals with an environment that doesn’t stay still while it’s gripping, lifting, and stashing. I declare that Brian might have continued to edit the paragraph between the time the app-animal noticed the fragment and the time it gripped it. In actual fact, it’d be almost impossible for Brian to finish typing even a single character before then, but let’s run with the idea.

That’s a problem because:

- The app-animal perceived a fragment at a particular location.

- It instructed its motor system to move its “hand” to that location and grip what’s there.

- By the time the hand got there, new characters added before the fragment had pushed it along, so it was no longer where it was perceived to be.

What should happen?

A simple, general, and biologically plausible solution is: nothing. The hand draws back due to the surprise. I liken this to the way a hand draws back from a hot stove without any signal from the brain: the reaction to the perception is controlled by the spinal cord. Going with the metaphor of the brain as a prediction engine, we can say this:

- When we think of the mechanism behind a hand jerking back, it’s not so useful to think of a perceptual subsystem (receptors for heat in the skin) that happens to be housed in the same structure as a motor subsystem (neurons that control muscles), with the two acting independently, orchestrated by an exterior control system.

- Rather, let’s think of a combined perceptual/motor system that has been hard-coded to autonomously react to pain.

- Taking that a step further, recall my earlier meditation on how it is I reach for a can of LaCroix™ fizzy water without looking at it. I supposed an autonomous subsystem that replays a rehearsed pattern of movements, regulated by expectations of what proprioceptive perception will reveal. (For example, the surprise of hitting a wall halfway there would “throw an interrupt”, provoking a supervisor process to deal with the exception.) The difference from the pain example is that the can-gripping integrated subsystem isn’t hardcoded (and permanent) but rather created on the fly for a particular purpose.

- A similar surprise would happen if my hand reached the end of a movement, gripped the can, and it felt wrong. (I’ve noticed this happening when it turns out I’d put the can down oddly rotated. The unconscious lifting-to-my-mouth next action is interrupted and I have to at least semi-consciously attend to rotating the can.)

- So my analogy is that the grip-lift-and-stash movement is packaged with an autonomous perception-plus-reaction such that if the grip “feels wrong”, the whole rigamarole just stops. (I’m defining “feels wrong” so that a little shifting of the fragment – less than half its full length – doesn’t feel wrong. More about that later.) This motor-ish subsystem can just stop, instead of searching for what happened to the fragment, because the app-animal is still periodically scanning the paragraph. Presumably it will again notice the fragment, now in its new location. Or, if Brian has deleted one of the gaps, there won’t be a fragment any more. Or, if Brian has made an additional fragment, one will be removed in one scan and the other in the next one.

It’s probably worth emphasizing that stopping the current action doesn’t require running any “undo” code. Rather, a function simply exits instead of continuing. That’s in keeping with inspiration from Pengi.
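A rough Python sketch of that "simply exits" shape follows. It is an illustration under assumptions, not the prototype's implementation. The paragraph is assumed to be a plain string, a gap is assumed to be two or more newlines, and `Environment`, `fragment_at`, and `grip_lift_stash` are hypothetical names. Removing the gap that follows the fragment when the text closes up is also a guess.

```python
import re
from dataclasses import dataclass, field

GAP = re.compile(r"\n{2,}")  # assumption: a gap is two or more newlines


@dataclass
class Environment:
    paragraph: str
    stash: list = field(default_factory=list)  # stand-in for the fragment list


def fragment_at(paragraph: str, point: int):
    """Return (start, end) of the fragment containing `point`, or None.

    Text counts as a fragment only if there is a gap on both sides of it.
    """
    gaps = list(GAP.finditer(paragraph))
    for left, right in zip(gaps, gaps[1:]):
        if left.end() <= point < right.start():
            return left.end(), right.start()
    return None


def grip_lift_stash(env: Environment, expected_start: int, expected_len: int) -> bool:
    """Try to move one fragment to the stash. Returns True if it happened."""
    grip_point = expected_start + expected_len // 2  # aim for the middle
    felt = fragment_at(env.paragraph, grip_point)
    if felt is None:
        # Surprise: the grip "feels wrong". Nothing has been changed yet,
        # so there is nothing to undo. Just stop.
        return False
    start, end = felt
    env.stash.append(env.paragraph[start:end])
    # Assumption: close up the text by removing the fragment and the gap after it.
    following_gap = GAP.match(env.paragraph, end)
    env.paragraph = env.paragraph[:start] + env.paragraph[following_gap.end():]
    return True
```

Because the only exit before any mutation is the early `return False`, a failed grip leaves both the paragraph and the stash exactly as they were. The next periodic scan gets to decide what, if anything, to do.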

Turtles all the way down

This implementation of fragment handling first atomically scans the paragraph to look for the fragment. Moreover, the grip, lift, and stash operation – including the check to see if the fragment has moved – is also atomic.

This is cheating.

A scene can change as your eyes move over it. When you grip an object in the world, someone else can grip it at the same time, preventing you from lifting it.

Now, the “give up and try again from the beginning if you notice anything odd” approach could be applied at a smaller granularity. Here’s how.

First, let me enumerate the ways a “grip” action becomes uncomfortable:

- The point of gripping lands on text not within the fragment (determined by checking that there’s still a gap on both sides). This allows a fragment to move up to around half its length. The motivation is that if you grab a stick on the ground, you’ll go for its center of mass. Unless you’re picking it up to use as a club. Or to poke at something out of your reach. Or one end is closer to you than the middle. Look, just give me this one, OK? If what you hit doesn’t feel like a stick, you’ll react with surprise. But it doesn’t matter if you don’t grip exactly the center of the stick.

- If the fragment has changed its size, or if the editing cursor is within its bounds, that’s weird: Brian is messing with it at the same time as the app-animal. Let Brian have it; come back to it later. (The next scan will adjust the expected size.)
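The two checks can be sketched as a single function that reports how the grip feels. This is a sketch under assumptions: the paragraph is a plain string, a gap is two or more newlines, the cursor is an index between characters, and "within its bounds" is read as strictly inside the fragment (how the boundaries should count is a guess). `Feel` and `feel_grip` are hypothetical names.

```python
import re
from enum import Enum

GAP = re.compile(r"\n{2,}")  # assumption: a gap is two or more newlines


class Feel(Enum):
    OK = "feels like the expected fragment"
    NOT_A_FRAGMENT = "grip point is not on text with a gap on both sides"
    BEING_EDITED = "size changed, or the cursor is inside the fragment"


def feel_grip(paragraph: str, cursor: int, expected_start: int, expected_len: int) -> Feel:
    """Classify a grip aimed at the middle of where the fragment was last seen."""
    grip_point = expected_start + expected_len // 2
    gaps = list(GAP.finditer(paragraph))
    for left, right in zip(gaps, gaps[1:]):
        start, end = left.end(), right.start()
        if start <= grip_point < end:
            # First check passed: there is a gap on both sides of the gripped text.
            # Second check: is Brian messing with it right now?
            if end - start != expected_len or start < cursor < end:
                return Feel.BEING_EDITED
            return Feel.OK
    return Feel.NOT_A_FRAGMENT
```

Aiming at the middle is what gives the tolerance: the fragment can drift by up to about half its length in either direction and the grip point still lands on it.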

Because the grip-lift-and-stash sequence is atomic, if “grip” succeeds, the last two steps can assume no change to the fragment.

I could split the compound action into three atomic sub-actions, which would produce this sequence of events:

- grip: this checks only that the fragment can be felt. (Check 1 above.) The result of the grip action is an updated notion of where the stick is. This atomic sub-action finishes, giving Brian a chance to mess with the paragraph.

- After some time, the affordance of “feeling” a fragment being clutched will initiate the attempt to…

- lift: The check for a moving fragment is repeated. The checks for a changed size or presence of the cursor are also made: “Hey! I feel someone pulling on my stick!” If the fragment feels dodgy, it’s released. (The function `lift_fragment` will exit without installing an affordance that will provoke `stash_fragment`.) Lifting cuts the fragment out of the text of the paragraph and puts it somewhere else. (Physical analogy: it puts it off to the side in some sort of staging area.)

- The affordance of “seeing” a fragment in the staging area will cause…

- stash: Since (I’ll assume) the staging area is inaccessible to Brian, there need be no check before the fragment is put into its permanent home (a list visible to Brian, one he can manipulate).
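Here is one way the three sub-actions might chain through planted affordances, sketched in Python. It is a guess at the shape, not the prototype's code. Only the names `lift_fragment` and `stash_fragment` come from the text; `World`, `fragment_at`, `grip_fragment`, and `step` are hypothetical. Modelling affordances as a queue of pending (action, arguments) pairs is an assumption, as is treating a gap as two or more newlines.

```python
import re
from dataclasses import dataclass, field

GAP = re.compile(r"\n{2,}")  # assumption: a gap is two or more newlines


@dataclass
class World:
    paragraph: str
    cursor: int = 0                                  # Brian's editing cursor
    staging: list = field(default_factory=list)      # out of Brian's reach
    stash: list = field(default_factory=list)        # the list Brian can see
    affordances: list = field(default_factory=list)  # planted (action, args) pairs


def fragment_at(paragraph, point):
    """Return (start, end) of the gap-bounded text containing `point`, or None."""
    gaps = list(GAP.finditer(paragraph))
    for left, right in zip(gaps, gaps[1:]):
        if left.end() <= point < right.start():
            return left.end(), right.start()
    return None


def grip_fragment(world, start, length):
    felt = fragment_at(world.paragraph, start + length // 2)
    if felt is None:
        return  # nothing planted, so the implicit plan simply ends
    # Plant the "feeling a clutched fragment" affordance with the updated location.
    world.affordances.append((lift_fragment, (felt[0], length)))


def lift_fragment(world, start, length):
    felt = fragment_at(world.paragraph, start + length // 2)
    if felt is None:
        return  # the fragment moved away or is gone: release
    s, e = felt
    if e - s != length or s < world.cursor < e:
        return  # someone is pulling on the stick: release
    world.staging.append(world.paragraph[s:e])
    removed_to = GAP.match(world.paragraph, e).end()  # also drop the following gap
    world.paragraph = world.paragraph[:s] + world.paragraph[removed_to:]
    if world.cursor > s:  # keep Brian's cursor on the same text
        world.cursor = max(s, world.cursor - (removed_to - s))
    # Plant the "seeing a fragment in the staging area" affordance.
    world.affordances.append((stash_fragment, ()))


def stash_fragment(world):
    world.stash.append(world.staging.pop(0))  # no check needed here


def step(world):
    """Run one pending atomic sub-action, if any; Brian may edit between steps."""
    if world.affordances:
        action, args = world.affordances.pop(0)
        action(world, *args)
```

A driver would call `grip_fragment` when a scan reports a fragment, then call `step` repeatedly, with Brian free to change `paragraph` and `cursor` between calls. A sub-action that returns early plants nothing, so the later steps never run.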

All that seems easy enough, but there’s a race:

- The whole grip-lift-stash sequence is started because a perceptual process noticed the paragraph had a particular pattern of gaps and text.

- Suppose the grip process starts and finishes without noticing anything funny about the fragment it grips.

- The perceptual process happens to run again and initiates another grip-lift-stash sequence before the first one completes.

Now, this is probably OK. The grip from the second grip-lift-stash is likely to discover that the fragment is missing and exit without making any change to the environment. Or, if it does grip the fragment before it’s moved, the lift will discover that what’s been gripped is now gone. Or… etc.

I need to think this through, maybe invent something (or some things) akin to optimistic locking but biologically plausible. I also need to do some research on how brains integrate different forms of perception (like vision and touch).

Still: baby steps. The next iteration of this prototype will finish up a second direct control link: signaling that a Big Edit is in progress. It’s already partly implemented, but finishing it will force me to solve a simpler version of the above problem.

Direct control links were explained in episode 41. They are neural connections between perception and motor neurons such that a particular perception (or affordance) causes a motor action with little to no processing along the way. For example, a direct control link between a fly’s feet and its wings means that the feeling of no longer standing on a surface automatically starts the wings beating.