The architecture of the “app animal” prototype has settled down enough that it’s worth describing in a semi-technical way. This post is about the core building blocks. The next will be about what’s built with them. Later posts will dive into the Elixir code in a way I hope is friendly to Elixir beginners.

The architecture is all about asynchrony. Heavily asynchronous code is notoriously hard to get right. That’s going to be worse for the app animal, which will have many pieces interacting asynchronously. In a sense, the whole point of this project is to find a way for a mere human to design the kind of asynchronous machine that biological evolution successfully groped its way toward.

Clusters

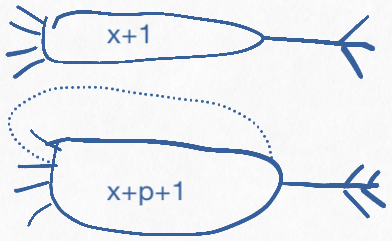

There are two sorts of clusters, pictured above. You can find definitions of terms in JARGON.md. Links in italicized text lead to the jargon file or, sometimes, to the source.

Linear clusters receive an asynchronous pulse that can carry any Elixir value (typically a number, small map, or small list). Think of the cluster as a bundle of quiescent neurons. When it receives a pulse, the cluster calculates some function. It will usually send the resulting value down its out edges to downstream clusters as an outgoing pulse.

The downstream clusters are hardcoded (no new clusters are created once the system starts). To make the analogy to biological clusters stronger, clusters “know” neither their upstream nor their downstream neighbors: a pulse comes in, from who knows where, and another pulse goes out, to who knows where.

A linear cluster is implemented as a transient Elixir process. It is launched as a Task, meaning that it runs a function and then exits. (By analogy, the neurons in the cluster go quiescent and revert to their baseline metabolism, burning as little glucose as possible.)

An upstream cluster receives no return value or callback from its downstream. (If the upstream is another linear cluster, it’s almost certainly already gone quiescent anyway.)
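For readers new to Elixir, here is a minimal sketch of the “launch a Task, compute, send, exit” idea. It is not the app animal’s code: `LinearSketch.fire/3` is a hypothetical name, and the `deliver` function is an assumed stand-in for whatever routes outgoing pulses, so the calculation never learns who its downstream neighbors are.

```elixir
defmodule LinearSketch do
  # Hypothetical helper, not the app animal's real API.
  # `calc` is the cluster's calculation. `deliver` stands in for the
  # routing that passes the result to downstream clusters, so the
  # cluster itself never learns who they are.
  def fire(calc, pulse_data, deliver) do
    # Task.start/1 runs the function in a fresh process and returns
    # {:ok, pid} immediately: the sender gets no result and no callback.
    Task.start(fn ->
      pulse_data
      |> calc.()
      |> deliver.()
      # Returning from this function ends the process ("quiescence").
    end)
  end
end

parent = self()
{:ok, _pid} = LinearSketch.fire(& &1 + 1, 41, fn out -> send(parent, {:pulse, out}) end)

receive do
  {:pulse, value} -> IO.inspect(value)   # prints 42
after
  1_000 -> IO.puts("no pulse arrived")
end
```

The caller only ever sees `{:ok, pid}`; the incremented value arrives separately, as a message, and the task process is gone by then.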

Defining a new cluster is deliberately low ceremony. Most often, you define it by plugging in a function. Here is a linear cluster that increments:

    C.linear(:first, & &1 + 1)

The & notation is a shorthand way of defining an anonymous function. Parameters are numbered rather than named, so &1 refers to the first parameter. The function shown is equivalent to fn arg -> arg + 1 end.

The :first argument is the name of this cluster within its network (about which, see the next post). The second is the calculation it performs.
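A quick way to convince yourself that the two spellings of the increment function are the same thing. The variable names are illustrative only. Note that anonymous functions are called with a dot before the parentheses.

```elixir
# Two ways of writing the same anonymous function.
inc_capture = & &1 + 1
inc_fn = fn arg -> arg + 1 end

# Anonymous functions are called with `.(...)`.
IO.inspect(inc_capture.(41))  # prints 42
IO.inspect(inc_fn.(41))       # prints 42

# With more parameters, &1, &2, ... refer to them by position.
sub = &(&1 - &2)
IO.inspect(sub.(10, 3))       # prints 7
```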

Clusters don’t have to generate pulses. If the function returns the special value :no_result, nothing is sent downstream. This can be used to implement a gate:

    C.linear(:second, fn input ->
      if input < 0,
        do: :no_result,
        else: input
    end)
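The post doesn’t show how the runtime reacts to `:no_result`. One plausible reading, sketched here with a hypothetical `GateSketch.sent_downstream/2` helper, is that each result is checked before anything is sent, so a stream of pulses through the gate above loses its negative values.

```elixir
defmodule GateSketch do
  # Hypothetical helper: apply a cluster's calculation to each pulse and
  # keep only the results that would actually travel downstream.
  def sent_downstream(calc, pulses) do
    pulses
    |> Enum.map(calc)
    |> Enum.reject(&(&1 == :no_result))
  end
end

# The gate calculation from the example above.
gate_calc = fn input ->
  if input < 0, do: :no_result, else: input
end

IO.inspect(GateSketch.sent_downstream(gate_calc, [3, -1, 5]))  # prints [3, 5]
```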

The cluster-maker function, linear, is an ordinary function that produces an Elixir struct.

A struct is, essentially, an Elixir Map or key-value store with a set of predefined and required keys. All instances of a struct share a name. linear produces structs named AppAnimal.Cluster.Linear. Specialized linear clusters are made by wrapping linear in a façade function. For example, the gate function simplifies creating gate clusters:

    C.gate(:second, & &1 >= 0)

gate takes its function argument and adds in the if test and the return value:

    def gate(name, predicate) do
      f = fn pulse_data ->
        if predicate.(pulse_data),
          do: pulse_data,
          else: no_result()
      end

      linear(name, f, label: :gate)
    end

The label on the last line just adds a little logging information, as shown in the second line below:

    summarizer      gap_count                2
    gate            is_big_edit?             2
    forward_unique  ignore_same_edit_status  2
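For readers who want something to run, here is a self-contained sketch of how linear and gate might fit together. The struct’s fields (`name`, `calc`, `label`), the `Sketch.*` module names, and a `no_result/0` that returns `:no_result` are all assumptions for illustration, not the real AppAnimal definitions. It also shows `@enforce_keys`, which is how a struct makes particular keys mandatory at construction time.

```elixir
defmodule Sketch.Linear do
  # Hypothetical fields; the real AppAnimal.Cluster.Linear may differ.
  # Keys in @enforce_keys must be supplied whenever the struct is built.
  @enforce_keys [:name, :calc]
  defstruct [:name, :calc, label: :linear]
end

defmodule Sketch.C do
  # Hypothetical stand-in for the post's `no_result()` helper.
  def no_result, do: :no_result

  # Cluster-maker: an ordinary function that returns a struct.
  def linear(name, calc, opts \\ []) do
    %Sketch.Linear{name: name, calc: calc, label: Keyword.get(opts, :label, :linear)}
  end

  # Façade: turn a predicate into a calculation that passes or blocks.
  def gate(name, predicate) do
    f = fn pulse_data ->
      if predicate.(pulse_data), do: pulse_data, else: no_result()
    end

    linear(name, f, label: :gate)
  end
end

gate = Sketch.C.gate(:second, & &1 >= 0)
IO.inspect(gate.label)       # prints :gate
IO.inspect(gate.calc.(5))    # prints 5
IO.inspect(gate.calc.(-5))   # prints :no_result
```

Because the façade only builds a function and hands it to linear, every gate is still an ordinary linear struct, which is why its log line can differ from a plain linear cluster’s only by the label.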

Whereas linear clusters in a sense “go away” between pulses, circular clusters persist and retain state. They are analogous to biological clusters that send pulses back to themselves as a “keep alive” signal.

So a circular cluster might operate on both the pulse value and the stored state. It might produce a pulse for downstream clusters, it might change its state, or both.

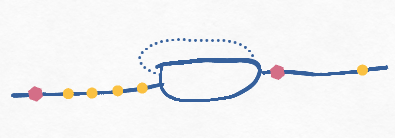

An example of a circular cluster would be one that forwards pulses, but only when they have a different value from the one last forwarded.

To do that, it must store the most recent value between pulses. The core of such a cluster’s function looks like this:

    calc = fn pulse_data, previously ->
      if pulse_data == previously,
        do: :no_result,
        else: {:useful_result, pulse_data, pulse_data}
    end

On the first line, you see the pulse data and the stored state (named previously). If the new pulse is the same as the previous one, there’s nothing to send on, and the stored state doesn’t have to be changed. But if it differs, it should be sent on and the cluster should remember it. That’s why pulse_data appears twice in the :useful_result tuple: once as the value to send downstream and once as the new stored state.
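Here is one way such a cluster could persist between pulses, sketched as a GenServer. That choice, the module and function names, the initial state of `nil`, the `deliver` stand-in for downstream routing, and the reading of the tuple as `{:useful_result, outgoing, new_state}` are all assumptions, not the app animal’s actual code.

```elixir
defmodule UniqueFilterSketch do
  # Assumption: a circular cluster modeled as a GenServer, since it must
  # stay alive between pulses and hold state.
  use GenServer

  # The calculation from the post: forward only values that differ from
  # the previous one, and remember what was forwarded.
  def calc(pulse_data, previously) do
    if pulse_data == previously,
      do: :no_result,
      else: {:useful_result, pulse_data, pulse_data}
  end

  # `deliver` is a hypothetical stand-in for routing to downstream clusters.
  def start_link(deliver), do: GenServer.start_link(__MODULE__, deliver)

  def pulse(pid, pulse_data), do: GenServer.cast(pid, {:pulse, pulse_data})

  @impl true
  def init(deliver), do: {:ok, %{previously: nil, deliver: deliver}}

  @impl true
  def handle_cast({:pulse, pulse_data}, state) do
    case calc(pulse_data, state.previously) do
      :no_result ->
        # Duplicate: send nothing and keep the stored state.
        {:noreply, state}

      {:useful_result, outgoing, new_previously} ->
        # New value: send it downstream and remember it.
        state.deliver.(outgoing)
        {:noreply, %{state | previously: new_previously}}
    end
  end
end

parent = self()
{:ok, filter} = UniqueFilterSketch.start_link(fn out -> send(parent, {:pulse, out}) end)
Enum.each([11, 11, 12], &UniqueFilterSketch.pulse(filter, &1))
# The caller's mailbox receives {:pulse, 11} and then {:pulse, 12}.
```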

Throbbing

Consider the above “forward only unique values” cluster. What happens when it stops getting pulses, perhaps because the animal’s attention has shifted elsewhere? Given that the biological brain is notoriously stingy with resources, we should – by analogy – want to shut down the circular cluster fairly quickly. Who does that? How?

In the brain, one cluster can shut another down (which we’ll see next post). But a cluster will also shut itself down, by “getting tired” — letting its own reinforcement pulses die out. It then goes quiescent until some outside pulse revives it.

In the app animal, there is a timer process that periodically tells clusters to “throb”.

The implementation uses a central timer because that makes it easy to “speed up the clock” for tests; otherwise, I’d have each cluster use Elixir’s Process.send_after to pulse itself.

On each throb, an uncustomized circular cluster decrements its current_strength value and, once the value reaches zero, instructs the Elixir/Erlang runtime to stop it.

However, a cluster can be customized to increase its strength whenever it receives a (non-throb) pulse. The “uniqueness filter” cluster is one such, so it will remain alive as long as it receives values frequently enough.

Conversely, if the outside world doesn’t keep reinforcing a circular cluster, it will quickly stop itself and quit consuming resources.
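The throbbing rules can be sketched as a GenServer that handles a `:throb` message. The module name, the starting strength of 3, and the rule that a pulse restores full strength are assumptions made for illustration. A central timer would send `:throb` to each cluster; a test can just send it directly, which is the “speed up the clock” benefit. The per-cluster alternative would be for each cluster to schedule its own next throb with `Process.send_after(self(), :throb, interval)`.

```elixir
defmodule ThrobSketch do
  # Assumptions: the cluster is a GenServer, strength starts at
  # @starting_strength, and any pulse restores full strength.
  use GenServer

  @starting_strength 3

  def start, do: GenServer.start(__MODULE__, :ok)

  @impl true
  def init(:ok), do: {:ok, %{current_strength: @starting_strength}}

  # Sent periodically by a central timer process, or directly by a test.
  @impl true
  def handle_info(:throb, state) do
    weaker = state.current_strength - 1

    if weaker <= 0 do
      # Ask the runtime to stop this process: the cluster goes quiescent.
      {:stop, :normal, %{state | current_strength: 0}}
    else
      {:noreply, %{state | current_strength: weaker}}
    end
  end

  # A (non-throb) pulse reinforces the cluster.
  def handle_info({:pulse, _pulse_data}, state) do
    {:noreply, %{state | current_strength: @starting_strength}}
  end
end
```

With these numbers, three throbs in a row stop the cluster, but a pulse arriving before the third throb buys it another three.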

But wait, isn’t that a bug?

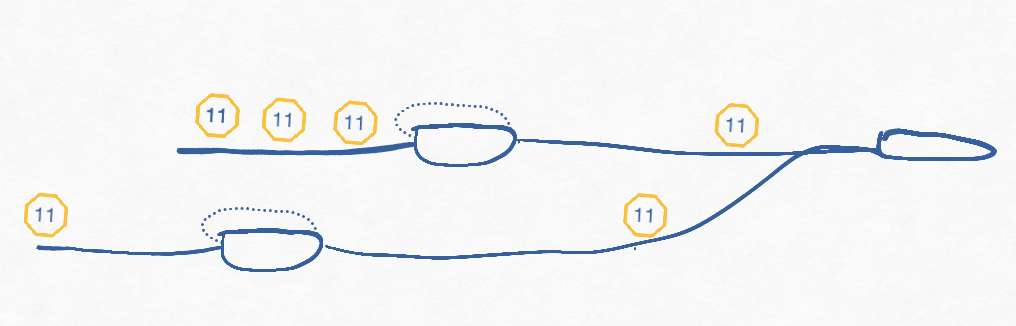

Suppose the uniqueness filter receives a stream of pulses: 11, 11, 11. Only the first 11 is passed downstream. But now the stream pauses, so the uniqueness filter “ages out” and stops.

Now the stream starts again: 11, 11, 11. What happens?

The first 11 in the new stream will activate the uniqueness filter cluster. That filter retains no state from its previous activation. Retaining state would require some active cluster to expend energy, which would defeat the purpose of aging out, which is to save energy.

Therefore, the newly-revived cluster will send an 11 downstream, even though – from the point of view of downstream clusters – it’s violating its contract by sending a duplicate.
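The scenario can be replayed with a small pure-function sketch in which each burst of pulses starts from empty state (`nil`), standing in for a freshly revived filter. `RevivalSketch` and `run_burst/1` are hypothetical names; the calculation is the one shown earlier.

```elixir
defmodule RevivalSketch do
  # The uniqueness calculation from the post.
  def calc(pulse_data, previously) do
    if pulse_data == previously,
      do: :no_result,
      else: {:useful_result, pulse_data, pulse_data}
  end

  # One activation of the filter over a burst of pulses, starting from
  # no stored state (nil). Returns what went downstream, in order.
  def run_burst(pulses) do
    {sent, _final_state} =
      Enum.reduce(pulses, {[], nil}, fn pulse, {sent, previously} ->
        case calc(pulse, previously) do
          :no_result -> {sent, previously}
          {:useful_result, out, new_state} -> {[out | sent], new_state}
        end
      end)

    Enum.reverse(sent)
  end
end

# Each burst starts fresh because the filter aged out in between.
first_burst = RevivalSketch.run_burst([11, 11, 11])
second_burst = RevivalSketch.run_burst([11, 11, 11])
IO.inspect(first_burst ++ second_burst)   # prints [11, 11]
```

Within a burst the filter does its job, but across the gap downstream clusters see 11 twice.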

What this reveals is that the purpose of the uniqueness filter isn’t correctness but rather energy efficiency. It is not protecting the downstream clusters from duplicate values; it’s protecting them from being activated again and again with the same value. But an occasional duplicate activation has to be OK.

In the case that inspired the uniqueness filter, that cluster is part of a chain of clusters that (in the real implementation) would paint a paragraph’s border some unobtrusive shade of red. The filter is to keep the app animal from painting the border red, then doing it again, then doing it again, then doing it again,… If you take the analogy of an animal moving about in the world, such obsessive, pointless repetitions would be far too much of a waste.

However, occasionally doing redundant work is not going to be a big deal. You may remember that, in my interview with David Chapman, I described a situation in which a wasp creepily does unnecessary work in response to a repeated affordance – but only when a researcher nastily moves its paralyzed prey in a way that would rarely, if ever, happen in nature.

So that’s two principles of asynchrony-tolerant design for an app animal. First, try to make the system something like idempotent. Whether a repeated operation that affects the world can ever be truly idempotent is… well, it’s not something I care about. Second, accept that you’ll fail, so design the animal so that its interaction with the world tolerates some pointless repetition.

This seems to me not horribly different from eventual consistency.
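To make the first principle concrete, here is a sketch with two hypothetical downstream actions; neither is from the app animal. Painting a border a given color is naturally idempotent, so a duplicate pulse only wastes effort. Appending a warning is not, so a duplicate changes the result.

```elixir
defmodule IdempotenceSketch do
  # Hypothetical downstream actions on a paragraph's display state.

  # Idempotent: painting the border red twice leaves the same state as
  # painting it once. A duplicate pulse is wasted work, not a bug.
  def paint_border(display, color), do: Map.put(display, :border, color)

  # Not idempotent: each duplicate pulse adds another copy.
  def add_warning(display, text) do
    Map.update(display, :warnings, [text], fn warnings -> [text | warnings] end)
  end
end

display = %{border: :none}
once = IdempotenceSketch.paint_border(display, :red)
twice = IdempotenceSketch.paint_border(once, :red)
IO.inspect(once == twice)   # prints true
```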

There’s another important (I hope!) method of dealing with asynchrony. Because explaining that depends on higher-level architecture, I’ll defer it to the next post.

Teaser: neurons are slow, compared to computers. But neurons are fast compared to muscles.