Here are the guidelines or principles or heuristics I’ll be using for early prototypes.

-

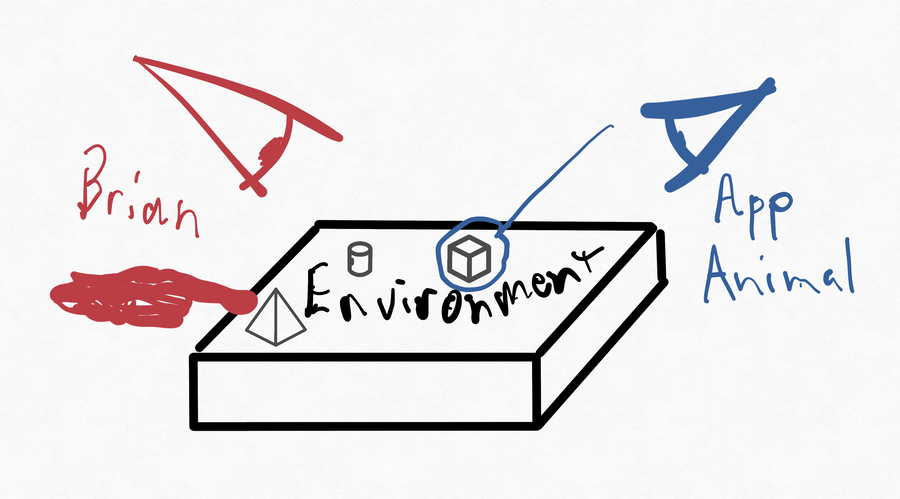

The app and the user (hereafter: “Brian”) are considered two independent (asynchronous) animals interacting via an environment. For the sample app, the environment is a document (in the broad sense).
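As a rough illustration of “two independent animals interacting via an environment”, here is a minimal sketch. It uses Python’s asyncio tasks as a stand-in for the Erlang processes mentioned below. The `Document` class, the `brian` and `app_animal` coroutines, and the polling interval are all hypothetical. The only point is that neither animal calls the other: each one just reads or writes the shared document.

```python
from __future__ import annotations

import asyncio


class Document:
    """The shared environment (hypothetical): just a list of paragraphs."""

    def __init__(self) -> None:
        self.paragraphs: list[str] = []


async def brian(doc: Document) -> None:
    # The user changes the environment at his own pace.
    for text in ["First paragraph.", "Second paragraph."]:
        doc.paragraphs.append(text)
        await asyncio.sleep(0.01)


async def app_animal(doc: Document, looks: int) -> list[int]:
    # The app never hears from Brian directly; it only looks at the document.
    seen: list[int] = []
    for _ in range(looks):
        seen.append(len(doc.paragraphs))
        await asyncio.sleep(0.01)
    return seen


async def demo() -> tuple[list[str], list[int]]:
    doc = Document()
    # Two independent animals, run concurrently, sharing nothing but `doc`.
    _, seen = await asyncio.gather(brian(doc), app_animal(doc, looks=4))
    return doc.paragraphs, seen
```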

-

The “app-animal” is divided into three systems. The perceptual system observes the environment, looking for new affordances. When it sees one, it hands control to the “control” system, which typically instructs the motor system to change the environment. This sequence is a direct control link, and it’s what I’ll focus on in early prototypes.
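The direct control link can be sketched as three asyncio tasks (again standing in for Erlang processes) joined by queues. Perception reports an affordance, control turns it into an instruction, and the motor system applies that instruction to the environment. The affordance used here (an empty paragraph), the “delete” instruction, and the queue wiring are illustrative assumptions, not a settled design.

```python
from __future__ import annotations

import asyncio

# Hypothetical affordance: an empty paragraph. Hypothetical response: delete it.


async def perceptual(doc: list[str], to_control: asyncio.Queue) -> None:
    # Observe the environment, looking for the affordance.
    for index, text in enumerate(doc):
        if text == "":
            await to_control.put(("empty-paragraph", index))
            return
    await to_control.put(None)  # nothing seen this time


async def control(from_perception: asyncio.Queue, to_motor: asyncio.Queue) -> None:
    # Decide what to do about the affordance and instruct the motor system.
    affordance = await from_perception.get()
    if affordance is None:
        await to_motor.put(None)
        return
    _kind, index = affordance
    await to_motor.put(("delete", index))


async def motor(doc: list[str], from_control: asyncio.Queue) -> None:
    # Change the environment as instructed.
    instruction = await from_control.get()
    if instruction is not None and instruction[0] == "delete":
        del doc[instruction[1]]


async def direct_control_link(doc: list[str]) -> list[str]:
    to_control: asyncio.Queue = asyncio.Queue()
    to_motor: asyncio.Queue = asyncio.Queue()
    await asyncio.gather(
        perceptual(doc, to_control),
        control(to_control, to_motor),
        motor(doc, to_motor),
    )
    return doc
```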

-

All modules are structured as a soup of actors that, ideally, communicate asynchronously. (Exceptions will come down to human weakness in managing complexity.) Prototypes will use Erlang processes. The app proper will use Swift actors, but they’ll be used as if they were as lightweight as Erlang processes. “Processes” is the term I’ll use going forward.
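A minimal sketch of what “as lightweight as Erlang processes” might look like, assuming Python asyncio as the substrate. A process is one task plus one mailbox, and sending never blocks the sender. `Process`, `spawn`, `send`, and `receive` are hypothetical names that loosely mirror Erlang’s `spawn`, `!`, and `receive`. This is not Erlang’s or Swift’s actual API.

```python
from __future__ import annotations

import asyncio
from typing import Any, Awaitable, Callable


class Process:
    """A lightweight process: one task plus one mailbox, Erlang-style."""

    def __init__(self, behavior: Callable[[Process], Awaitable[None]]) -> None:
        self.mailbox: asyncio.Queue = asyncio.Queue()
        self.task = asyncio.create_task(behavior(self))

    def send(self, message: Any) -> None:
        # Fire-and-forget: the sender never waits for the receiver.
        self.mailbox.put_nowait(message)

    async def receive(self) -> Any:
        return await self.mailbox.get()


def spawn(behavior: Callable[[Process], Awaitable[None]]) -> Process:
    return Process(behavior)


async def demo() -> list[str]:
    log: list[str] = []

    async def logger(self: Process) -> None:
        # Handle messages one at a time until told to stop.
        while (message := await self.receive()) != "stop":
            log.append(message)

    p = spawn(logger)
    for message in ["hello", "world", "stop"]:
        p.send(message)  # returns immediately
    await p.task
    return log
```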

-

Perceptual processes will be indexical: each will be looking at something, such as a paragraph. I will tend to use spatial metaphors for the objects of attention. For example, I think of a script as a linear series of paragraphs or groups of paragraphs.
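One way to read “indexical” in code is sketched below, under the assumption that each paragraph carries a stable id. The perceptual process is told *which* paragraph to attend to, not where that paragraph currently sits. It therefore keeps working when Brian inserts text above it. `Paragraph`, `watch_paragraph`, and the “ends with ?” affordance are hypothetical. asyncio stands in for Erlang processes.

```python
from __future__ import annotations

import asyncio
from dataclasses import dataclass, field
from itertools import count

_ids = count()


@dataclass
class Paragraph:
    text: str
    id: int = field(default_factory=lambda: next(_ids))  # stable identity


async def watch_paragraph(script: list[Paragraph], target_id: int,
                          has_affordance, poll: float = 0.01) -> Paragraph | None:
    # Indexical: the process attends to *this* paragraph (by id),
    # wherever it happens to sit in the script right now.
    while True:
        here = next((p for p in script if p.id == target_id), None)
        if here is None:
            return None  # the thing attended to is gone
        if has_affordance(here):
            return here
        await asyncio.sleep(poll)


async def demo() -> str | None:
    script = [Paragraph("One."), Paragraph("Two."), Paragraph("Three.")]
    target = script[1]

    async def brian() -> None:
        await asyncio.sleep(0.02)
        script.insert(0, Paragraph("Zero."))  # positions shift...
        await asyncio.sleep(0.02)
        target.text = "Two?"                  # ...but the watcher still finds it

    watcher = asyncio.create_task(
        watch_paragraph(script, target.id, lambda p: p.text.endswith("?")))
    await brian()
    found = await watcher
    return found.text if found else None
```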

-

Perceptual processes are created by control processes that are, metaphorically, saying “I want you to pay attention to that and look for your affordance there.” When a perceptual process sees its affordance, it will start a control process specific to that affordance and then exit. (The app-animal may want to watch for repeated affordances, but it will do that by having a control module recreate an indexical perceptual process.)
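The hand-off and the “recreate to watch again” pattern might look like this minimal asyncio sketch. The perceptual process exits right after starting its control process. The control process, in turn, starts a fresh perceptual process rather than leaving an old one running. The blank-paragraph affordance and the names `perceive_blank` and `on_blank` are hypothetical.

```python
from __future__ import annotations

import asyncio

# Hypothetical affordance for illustration: a blank paragraph ("") in the document.


async def perceive_blank(doc: list[str], tasks: list[asyncio.Task],
                         log: list[str]) -> None:
    # Pay attention to the document until the affordance shows up...
    while "" not in doc:
        await asyncio.sleep(0.01)
    # ...then start a control process specific to it, and exit.
    tasks.append(asyncio.create_task(on_blank(doc, tasks, log)))


async def on_blank(doc: list[str], tasks: list[asyncio.Task],
                   log: list[str]) -> None:
    doc.remove("")
    log.append("removed a blank paragraph")
    # To watch for the affordance again, recreate the perceptual process.
    tasks.append(asyncio.create_task(perceive_blank(doc, tasks, log)))


async def demo() -> tuple[list[str], list[str]]:
    doc: list[str] = ["a"]
    log: list[str] = []
    tasks: list[asyncio.Task] = []
    tasks.append(asyncio.create_task(perceive_blank(doc, tasks, log)))
    for text in ["", "b", ""]:  # Brian's edits
        doc.append(text)
        await asyncio.sleep(0.05)
    for task in tasks:  # end of demo: stop whatever is still watching
        task.cancel()
    await asyncio.gather(*tasks, return_exceptions=True)
    return doc, log
```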

-

Perceptual processes may maintain state when an affordance requires observing a sequence of changes in the environment. As with all processes, the state will be minimal.
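As an example of “minimal state”, here is a sketch of the observation step for a process that notices a paragraph being split. The split only shows up as a sequence of two looks: before, the full text; after, a shorter prefix followed by a paragraph holding the rest. So the only thing remembered is the previous text. `SplitWatcher` and its split criterion are hypothetical; a real process would call `observe` from its polling loop.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SplitWatcher:
    """State for a perceptual process watching one paragraph (hypothetical).

    The only thing remembered between looks is the paragraph's previous text.
    """

    last_text: str

    def observe(self, text: str, next_text: str | None) -> bool:
        """Return True if this look completes a 'paragraph was split' sequence."""
        was, self.last_text = self.last_text, text  # minimal state update
        return (
            next_text is not None
            and text != ""
            and text != was
            and was.startswith(text)
            and was[len(text):].strip() == next_text.strip()
        )
```

For example, a watcher created with `"She waits. He leaves."` reports a split when it later sees `"She waits."` followed by `"He leaves."`.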

-

Perceptual processes will “get bored” over time and go away. I prefer that to shutting them down explicitly. (There will be a layering of perceptual processes that will enable both this and indexicality, but I haven’t figured that out yet.)
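“Getting bored” maps naturally onto a timeout that the process imposes on itself. Here is a minimal sketch using `asyncio.wait_for`. The `patience` and `poll` parameters are hypothetical, and the layering mentioned above is not modeled.

```python
from __future__ import annotations

import asyncio


async def bored_watcher(doc: list[str], wanted: str,
                        patience: float, poll: float = 0.01) -> str:
    """Look for `wanted` until it shows up or patience runs out."""

    async def look() -> None:
        while wanted not in doc:
            await asyncio.sleep(poll)

    try:
        await asyncio.wait_for(look(), timeout=patience)
        return "saw it"
    except asyncio.TimeoutError:
        return "got bored"  # nobody had to shut this process down
```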

-

Control processes will be ephemeral. They will react to an affordance by (1) changing what the perceptual system is attending to, and/or (2) instructing the motor system to change the environment. For convenience, I’m going to think of the control process as telling the motor system “create a new affordance (that is, an opportunity for action) for the human.” (I’m not sure about this.)
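A minimal sketch of one ephemeral control process doing both (1) and (2), then returning with nothing retained. It uses asyncio with a queue to the motor system. The “long paragraph” affordance, the `attention` set, and the `" [split?]"` mark left for Brian are hypothetical.

```python
from __future__ import annotations

import asyncio


async def control_long_paragraph(index: int, attention: set[int],
                                 motor: asyncio.Queue) -> None:
    """Ephemeral control process for a hypothetical 'long paragraph' affordance.

    It reacts once and returns; nothing about the episode is kept.
    """
    attention.add(index)  # (1) have perception attend to this paragraph
    await motor.put(("append", index, " [split?]"))  # (2) instruct the motor system


async def motor_once(doc: list[str], motor: asyncio.Queue) -> None:
    # The motor system creates an affordance *for the human*: a visible mark.
    action, index, suffix = await motor.get()
    if action == "append":
        doc[index] += suffix


async def demo() -> tuple[list[str], set[int]]:
    doc = ["A very long paragraph.", "Short."]
    attention: set[int] = set()
    motor: asyncio.Queue = asyncio.Queue()
    await control_long_paragraph(0, attention, motor)
    await motor_once(doc, motor)
    return doc, attention
```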

-

“The world is its own best model.” (Rodney Brooks)

Because control processes are ephemeral, any information needed to respond to a later affordance must be stored in the environment. It can be retrieved in two ways: (1) a perceptual process may be fired to “keep track of it”, or (2) when needed, a perceptual process will be started to scan the environment for the information. (In general, reacquiring information will be favored over keeping track of it.)
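Option (2), the favored one, might be sketched like this. A later control step remembers nothing. Instead, it starts a short-lived perceptual process that rereads the document for a marker an earlier step left there. The `"[split?]"` marker and the function names are hypothetical, and asyncio stands in for Erlang processes.

```python
from __future__ import annotations

import asyncio

MARK = "[split?]"  # hypothetical marker a control process left in the document


async def scan_for_marks(doc: list[str]) -> list[int]:
    """Short-lived perceptual process: look at the environment, report, exit."""
    await asyncio.sleep(0)  # yield once, as a separate process would
    return [i for i, text in enumerate(doc) if MARK in text]


async def later_control_step(doc: list[str]) -> list[int]:
    # No earlier process remembered which paragraphs were marked;
    # the information is reacquired from the document itself.
    return await asyncio.create_task(scan_for_marks(doc))
```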

-

Ephemeral control processes also imply that plans are handled the way the Pengi episode explained: each step leaves an affordance in the environment that prompts the next step.
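A toy sketch of a plan carried by the environment rather than by any process. Each ephemeral step finds the marker that prompts it, does its work, and replaces the marker with the one that prompts the next step. The `<step:…>` markers and the “drafted”/“reviewed” actions are purely hypothetical placeholders.

```python
from __future__ import annotations

# Hypothetical plan: each marker prompts one step, and each step leaves
# the marker for the next. No process remembers where the plan is.
STEPS = {
    "<step:draft>": ("drafted", "<step:review>"),
    "<step:review>": ("reviewed", "<step:done>"),
}


def react_once(doc: list[str]) -> bool:
    """One ephemeral control step: find a marker, act, leave the next one."""
    for i, text in enumerate(doc):
        for marker, (result, next_marker) in STEPS.items():
            if marker in text:
                doc[i] = text.replace(marker, f"{result} {next_marker}")
                return True
    return False  # no affordance left in the environment


def run_plan(doc: list[str]) -> int:
    steps = 0
    while react_once(doc):
        steps += 1
    return steps
```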

-

For the most part, I want the app-animal to detect affordances by observing the environment. For example, it should notice when one paragraph is split in two with two blank lines between them. But I’ll probably first implement a key chord that means “starting my split-a-paragraph editing thing now,” which will both split the paragraph and send the affordance to the paragraph’s watcher. That seems a better first step. And recognizing some affordances may be too hard, so I’ll settle for having to remember to signal my intent to the app-animal.
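The key-chord shortcut might be sketched as follows, assuming the document is a list of lines (blank lines are `""`) and each watched paragraph has an asyncio queue as its mailbox. The handler performs the edit itself and then sends the watcher the affordance directly, so nothing has to infer it from the text. `on_split_chord`, the `mailboxes` dict, and the `("split", …)` message shape are all hypothetical.

```python
from __future__ import annotations

import asyncio


def on_split_chord(doc: list[str], index: int, cursor: int,
                   mailboxes: dict[int, asyncio.Queue]) -> None:
    """Hypothetical key-chord handler: do the edit, then announce the affordance."""
    text = doc[index]
    # Split at the cursor, leaving two blank lines between the halves.
    doc[index:index + 1] = [text[:cursor].rstrip(), "", "", text[cursor:].lstrip()]
    box = mailboxes.get(index)
    if box is not None:
        # Tell the watcher where the two halves now are.
        box.put_nowait(("split", index, index + 3))


async def paragraph_watcher(mailbox: asyncio.Queue) -> tuple:
    # Instead of inferring the split from the text, receive it as a signal.
    return await mailbox.get()


async def demo() -> tuple[list[str], tuple]:
    doc = ["First half. Second half."]
    mailboxes = {0: asyncio.Queue()}
    watcher = asyncio.create_task(paragraph_watcher(mailboxes[0]))
    on_split_chord(doc, 0, len("First half."), mailboxes)
    signal = await watcher
    return doc, signal
```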